AVI - Flutter + Rive + OpenAI

Hi everyone! 🖖

First of all, I want to thank you for your support on my last post, Flutter + Rive + ML Kit.

It was incredible! Thank you all!

Today I want to show you my new friend AVI, a cute robot built with Flutter, Rive, and OpenAI.

I’m also working on a link where you can play with him and test him, but AVI needs some more testing first 😅.

The main goal of this post is not to show the code but to give you an idea of how I got to the current result.

I will show some code. It is unavoidable 🤓.

AVI was born after the last post. I thought, “I want to take this one step further.” So I created a new character in Rive and gave it a voice.

For this, I used flutter_tts.

TEXT TO SPEECH

The idea was to pass in some text and have AVI say it in a funny voice. Luckily, the package documentation was clear. I just needed to make a few changes and get a single instance of FlutterTts, configured with all the voice features I wanted, shared across all my code.

How can I do that? 🤔 With a provider.

So, the voice now has:

- locale: Because I’m Argentinian and I want AVI to speak Spanish 😄.

- pitch: For the funny/cute part.

- voice: This was the hard part because I couldn’t find what each voice sounds like, so… I tried them one by one.

Now, the speak method. I need a method that receives a sentence and passes it to my instance of FlutterTts.
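A minimal sketch of what this setup could look like, assuming a Riverpod `Provider` is what shares the instance. The names `AviVoice`, `aviVoiceProvider`, and `configure`, as well as the default locale code and pitch value, are illustrative assumptions and not the actual code behind AVI.

```dart
import 'package:flutter_riverpod/flutter_riverpod.dart';
import 'package:flutter_tts/flutter_tts.dart';

/// Hypothetical wrapper that keeps one FlutterTts instance and its settings
/// in a single place.
class AviVoice {
  AviVoice(this._tts);

  final FlutterTts _tts;

  /// Applies locale, pitch, and (optionally) a voice.
  /// The defaults are assumed values, chosen only for illustration.
  Future<void> configure({
    String language = 'es-AR',
    double pitch = 1.5,
    Map<String, String>? voice, // e.g. {'name': ..., 'locale': ...}
  }) async {
    await _tts.setLanguage(language);
    await _tts.setPitch(pitch);
    if (voice != null) {
      await _tts.setVoice(voice);
    }
  }

  /// Receives a sentence and hands it to the shared FlutterTts instance.
  Future<void> speak(String sentence) async {
    if (sentence.trim().isEmpty) return; // nothing to say
    await _tts.speak(sentence);
  }
}

/// One instance for the whole app, read through Riverpod.
final aviVoiceProvider = Provider<AviVoice>((ref) => AviVoice(FlutterTts()));
```

From a widget, something like `ref.read(aviVoiceProvider).speak('Hola, soy AVI')` would then reuse the same configured instance everywhere.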

OK, AVI is talking now! 🎊🎉

The next step was pretty obvious to me. I wanted to talk with AVI, not just type some text and listen to him read it back.

So I needed two things: for him to listen to me and, the most fun and beautiful part, for him to understand and respond to me.

But… How can I do this? 😅

Well, first things first: AVI needs to listen to me. For this, I used speech_to_text.

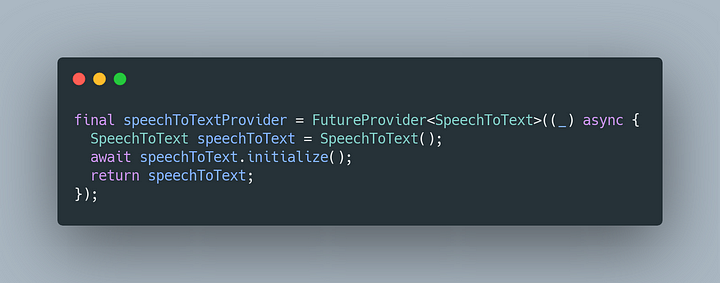

SPEECH TO TEXT

Here, the idea is clear. I say something, and it gets converted into a string.

As with flutter_tts, I needed a single instance of speech_to_text, accessible from any part of my code.

YES, a provider! 😎

Then I needed the method where all the magic happens.

So I configured some parameters in the listen call:

- localeId: As with flutter_tts, I’m going to speak Spanish, so speech-to-text needs to understand Spanish. I reuse AVI’s locale, assuming the user speaks the same language as AVI.

- pauseFor: This was necessary because the package doesn’t work perfectly on the web. It never stopped listening after I finished talking unless I stopped it with a button.
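As a rough sketch of those two parameters in use, the snippet below assumes the shared instance comes from a Riverpod `Provider` and that a three-second pause is enough to end a sentence. `speechProvider`, `listenToUser`, `onFinalWords`, and the duration are hypothetical choices for illustration only.

```dart
import 'package:flutter_riverpod/flutter_riverpod.dart';
import 'package:speech_to_text/speech_recognition_result.dart';
import 'package:speech_to_text/speech_to_text.dart';

/// One SpeechToText instance for the whole app.
final speechProvider = Provider<SpeechToText>((ref) => SpeechToText());

/// Starts listening and reports the recognized sentence once it is final.
Future<void> listenToUser(
  SpeechToText speech, {
  required String localeId, // the same locale AVI speaks
  required void Function(String words) onFinalWords,
}) async {
  // initialize() returns false when recognition is unavailable or denied.
  final available = await speech.initialize();
  if (!available) return;

  await speech.listen(
    localeId: localeId,
    // Assumed value: stop automatically after 3 seconds of silence,
    // so listening also ends on the web without pressing a button.
    pauseFor: const Duration(seconds: 3),
    onResult: (SpeechRecognitionResult result) {
      if (result.finalResult) {
        onFinalWords(result.recognizedWords);
      }
    },
  );
}
```

The callback receives a plain string, so whatever comes next in the pipeline does not need to know anything about the speech package.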

Great, AVI can listen to me now! ✅

Finally, the brain 🧠.

OpenAI — GPT-3

Surprisingly, this was the easiest part of the process.

For this part, I only needed access to the OpenAI API.

I don’t want to spend too long on this part because, no joke, OpenAI is super easy to use 🤯.

Using the completions endpoint of the API, I wrote a prompt that gives AVI a backstory and a personality.

Then I append the message transcribed from my speech and wait for the API’s response.

When OpenAI responds, I pass the response text to the speak method from the FlutterTts section.
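The flow above could be sketched roughly like this with dio. Everything specific here is an assumption: the persona text, the `Human:`/`AVI:` prompt layout, the model name, and the `max_tokens` and `temperature` values are placeholders, since the post only says that GPT-3 completions were used.

```dart
import 'package:dio/dio.dart';

/// Placeholder persona; AVI's real prompt is not shown in this post.
const aviPersona =
    'AVI is a cute, friendly robot who answers briefly and in Spanish.';

/// Appends the transcribed user message to the persona prompt.
String buildPrompt(String userMessage) =>
    '$aviPersona\n\nHuman: ${userMessage.trim()}\nAVI:';

/// Sends the prompt to the OpenAI completions endpoint and returns the text
/// of the first choice, or an empty string if there is none.
Future<String> askAvi(
  Dio dio,
  String apiKey,
  String userMessage, {
  String model = 'gpt-3.5-turbo-instruct', // assumed model name
}) async {
  final response = await dio.post<Map<String, dynamic>>(
    'https://api.openai.com/v1/completions',
    options: Options(headers: {'Authorization': 'Bearer $apiKey'}),
    data: {
      'model': model,
      'prompt': buildPrompt(userMessage),
      'max_tokens': 150, // assumed limit
      'temperature': 0.7, // assumed value
    },
  );

  final choices = response.data?['choices'] as List<dynamic>?;
  if (choices == null || choices.isEmpty) return '';
  return (choices.first['text'] as String).trim();
}
```

The returned string is what would be handed to the text-to-speech side, closing the loop: listen, ask, speak.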

And that’s all!

Thanks for getting here 🤟.

As I said, I’ll soon share a link where you can learn a little more about AVI and, most importantly, play with him and test him.

Thanks again, and see you in the next post 👋.

LIBRARIES USED

- For .riv animations, rive.

- For state handling, flutter_riverpod.

- For speech-to-text, speech_to_text.

- For text-to-speech, flutter_tts.

- For HTTP requests, dio.
